Using k-means clustering to uncover different types of human behavior

[This blog post is based on a paper I published, in collaboration with my colleagues from Universitat Rovira i Virgili, Universitat de Barcelona, Universidad Carlos III de Madrid, and Universidad de Zaragoza, on the journal Science Advances, back in 2016]

Today, I want to talk about one of my favorite research projects ever.

In this project, we designed and executed a social experiment to try to uncover different types of human behavior in the context of decision making processes when facing payoffs and uncertainty. I love this project not only because it was really fun to be involved in the entire process, but also, because our results somewhat contradict (or at least ask for an expansion on) Game Theory.

Ever since I got my phD in Game Theory on Networks, I have been interested in understanding better how human behavior challenges this theoretical framework. Game Theory, in a nutshell, is a mathematical tool to model human behavior. As a typical example, The Prisoner's Dilemma is used to model how two individuals face the decision between cooperation (C) or defection (D), and it is formalized as a payoff matrix for the four possible outcomes (individual 1 cooperates while individual 2 defects, individual 1 defects while individual 2 cooperates, both cooperate or both defect):

In the case of the Prisoner's Dilemma, T>R>P>S, that is to say, the highest payoff corresponds to a defector that faces a cooperator, then the payoff for two cooperators, next two defectors. And worst-case scenario is when a cooperator faces a defector. Actually, this is a general-form matrix that includes, other games (also called "social dilemmas"), just depending on the relative ordering of those four payoff parameters. I don't want to get into details about each one of the dilemmas here, but you can check out my other post here. Let's just say here that each game or social dilemma corresponds to a different social situation, where the incentives and relative ordering of the payoffs for each choice are also different, that is to say, the most convenient option is different in each game.

If we restrict this huge space of game options, by fixing to of the matrix parameters (R and P), we can focus on a 2-dimensional plane for the other two parameters (T and S). This corresponds to only four different games (Prisoner's Dilemma, Stag-Hunt, Chicken/Snowdrift game, and Harmony Game).

In our experiment, we recruited 541 subjects to play different rounds of this games-decisions, pairing them up randomly with each other. Each subject played between 13-18 rounds (around 20 minutes), which made up over 8,300 individual game choices across the entire T-S game space.

This way, our data set looks as follows: we have a unique ID for each player, and then for each one of her or his game rounds we know the values of T and S (that is, the the specific game conditions), her or his decision at the time (Cooperate or Defect) as well as who the opponent was and what her or his choice was.

|

| Snippet of the data set obtained and used in this project. Actually, you can freely obtain our data here. |

Before I show you the results of our experiment, let me show you how the cooperation heat map should look like, according to Game Theory. I'm gonna color-code the expected value of cooperation of individuals playing the different games in the T-S plane, if they were perfectly rational. Without any political connotation whatsoever, red means 100% cooperation, blue means 0% cooperation:

|

| Expected levels of cooperation in each point of the T-S plane of different games, if the subjects were perfectly rational. |

We observe how in the top-right quadrant (Harmony Game) there is a full cooperation situation (again, go back to this post for more info on the different games), while the bottom-right show zero cooperation, as dictated by the Prisoner's Dilemma. The other two quadrants show an interesting 50% cooperation situation, but however, they are different in nature: while the Snowdrift game (top-right) displays intermediate values of cooperation, the Stag-Hunt game (bottom-left) presents a clear separation between two sections, one of full cooperation, one of full defection. This is because the Snowdrift is a "anti-coordination game", while the Stag-Hunt is a "coordination game".

Ok, so, let's look at our experimental results now:

|

| Experimental levels of cooperation in each point of the T-S plane of different games. |

...Mmmm... not super similar, right? We observe that the top-left is redder, while the bottom-right is bluer... but that's about it. The other two quadrants are just kinda purple mess. Also, it looks like the red part is actually more a triangle than a full quadrant...

What do we do now to understand our results? Remember that we are trying to say something about different types of human behavior in different game conditions.

At this point, in our team there were actually two opposite approaches proposed. Some of us thought that we could try to look for known behavior patterns in the data: for example, it is known that some people always cooperate, no matter the situation, and others always defect. The other proposed approach (the one I championed) was to let an Game-Theory-agnostic algorithm classify the data on our subjects, and see if the results were interpretable within the Game Theory framework. This approach felt more interesting to me, and more capable of uncovering unknown patterns.

After some research about different clustering and classification algorithms, pros and cons, I decided for the k-means algorithm. The reason being that, in a problem like this, you don't really know the ground truth (you don't know what is the actual type of behavior of each person, nor how many types are there), and you only have a number of features about each individual (in my case, their decisions in each game) that hopefully can be used to define groups. Also, I wanted to keep things simple (after all, the paper was not a throughout study on the performance of different algorithms given my data, but instead novel computation social science paper that combines a social experiment, Game Theory and machine learning in an interesting way).

I will get into some of the computational details later, but let's look at the results from the k-means algorithm (k=5):

We present the resulting five clusters, where a person belonging to a given cluster is a thin vertical line, colored according to her or his average actions in each one of the four games.

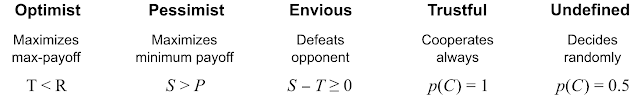

The five groups (we named them, to make it more convenient) display obviously very different behaviors in each one of the social dilemmas: The ones we call "Envious" seem to cooperate only in the Harmony game, and defect in all others, the "Pessimist" cooperate in the Harmony and the Snowdrift game, and defect in the Prisoner's Dilemma and the Stag-Hunt. The "Optimists" seem to like to cooperate in the Harmony and the Stag-Hunt. The "Trustful" cooperate everywhere, and finally, we have a small group of people that seem to play randomly (this could also be due to sample size limitations).

This results seem to make sense, but let's see if we can learn a bit more about each group. In order to do that, we plot a separate cooperation heat map plot for each cluster:

One could argue that it looks similar to this, right?

This idealized behavior of each group can easily be describe using the parameters in the payoff matrix! This way, we can describe what is the general motivation for a typical individual in each group:

Now, let me tell you that at this point, I was super excited! This is the reason why:

Within the framework of Game Theory, there is usually the assumption that individuals behave rational (that is, they try to maximize their benefits), while anything else is labeled as "irrational". In our study we find multiple interesting results: First, nobody seems to behave like the theory would dictate (notice that none of the five experimental cooperation heat maps, nor the aggregated one, look that the expected cooperation heat map I showed at the beginning!). Second, machine learning can help us classify people into well-defined types of behavior. And third, even if an individual acts non-rationally (according to Game Theory), you can still learn a lot about the rules that guide her or his behavior: that is, irrational it is not the same as random!

Now, for those of you interested in some technical details.

First of all, how do we know that 5 is the right number of clusters? The K-means algorithm finds the best partition of your data into K clusters (by minimizing the dispersion of values among elements in a cluster, and maximizing the distance of clusters' centroids). But it needs the proverbial K value entered as an input, so how did I know what number to input? I didn't, I just tried all values from 2 to 20, and calculated the Davies–Bouldin index for each case. This index is used to evaluate the performance of a given clustering scheme, using only features of the datas et, and it reaches its minimum value at the best partition. Plotting the average value of this index over 1,000 independent realizations, vs the number of clusters, we find that K = 5 is the optimum number (black line in the figure below):

Moreover, we also check that a randomized version of the data does not display any cluster structure (red line in the previous figure). Also, to account for the learning process that all players are assumed to undergo, we run the same analysis excluding the first two rounds for each one, obtaining basically the same results as when including all data.

Another robustness check we do, is to run the same clustering analysis, but leaving out a random fraction of the data each time, to observe whether we can still retrieve the cluster structure:

We observe how the cluster structure (that is, a minimum value at 5) is preserved when removing up to 300 random subjects (note that the total number of subjects in our study is 541). If we remove more than that, then the DB_Index doesn't display any minimum, indicating a lack of structure.

Comments