Understanding the adoption of innovation by medical professionals

This post is based on a paper I published in 2014 with my colleagues from Northwestern University. In that paper, I explored possible underlying mechanisms driving the adoption of medical innovation among a group of physicians at the Northwestern Memorial Hospital in Chicago.

The reason I care about this topic is simple: the adoption of medical innovation takes far longer than one would wish. According to some studies, it can take up to 17 years from the time a medical technique is approved until it becomes common practice! I would like to contribute to the study of this phenomenon, in the hope of helping to speed it up, so that people can benefit from medical advances as soon as they are available, and not a minute later!

As a researcher, this was also a really fun project for me, because it included two distinct components: a small social experiment and a computer simulation. The idea was first to observe and gather data on a real-life situation where a new medical technology was introduced for the first time, and then to use a computer to try out different scenarios (models) for the individual-level behavior of the subjects in the study. Finally, I wanted to determine which of the models better matches the empirical data we gathered.

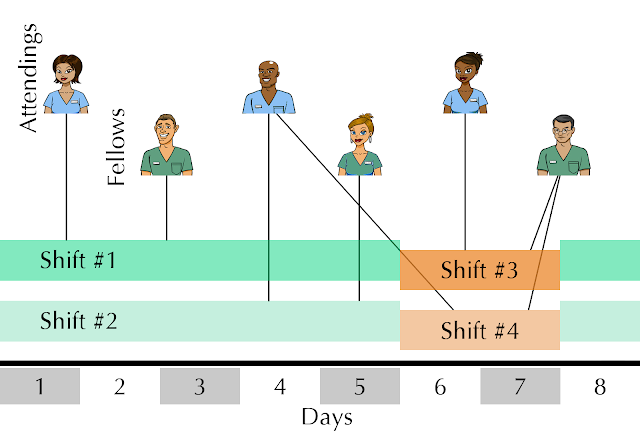

Because these types of processes are better described when the social structure of the individuals involved is included, we use a temporal-network approach as the basis for the computer simulations. For this reason, the first type of data we collected was complete information on who works with whom in the hospital department over an entire year (see the figure caption for more details).

If we plot the entire year's worth of shifts, it looks something like this:

Figure: Temporal network representation of the shifts of physicians working together. One attending (red) and one fellow (blue) are connected if they work together in a given shift (green) during the year.

The second piece of data we need is the overall number of adopters over time. We will use it as a benchmark against which to compare the results of our different simulation models.

We observe that after the first "seeding" of the innovation in the system (the event in which we selected two physicians and told them about it), the number of adopters remains constant for over two months, and only then starts increasing. The final number of adopters is 20 (55% of the population).

The next step is to simulate different possible models for how medical innovations spread on the actual network. We are going to focus on two families of models: infection and persuasion. In certain contexts, it is common to think of the spreading of information as the 'spreading of a virus', which is why we try the infection model first to see whether it fits our data.

- In particular, the infection model we implement is an SI model, where individuals are in one of two possible states: Susceptible or Infected. A Susceptible individual in contact with an Infected one has a probability p(infection) of becoming infected. The process starts with two infected individuals and everyone else susceptible (to replicate the social experiment). In addition, each individual has a probability p(immune) of being immune from the beginning, and hence of never becoming infected when in contact with an Infected individual. People interact with each other according to the shifts (the temporal network), and the infection propagates. We stop the simulation after the equivalent of a year. This model has two parameters, p(infection) and p(immune), and we need to explore its performance for each pair of values: that is, we run simulations to obtain the average time evolution curve for each pair of parameter values and compare it with the actual time evolution of the number of adopter physicians. More on this comparison between the infection model and the actual data later.

- The second model that we test in this work is called the 'persuasion model' and is similar to a Threshold model. We pick this model because we think it better represents the type of interactions between physicians at a teaching hospital: every individual has an initial opinion (coded as a floating-point number between 0, totally against it, and 1, totally in favor of using it), and as a result of a working interaction, both agents update their opinion by moving closer to the other person's by a given amount. This model potentially has more parameters than the infection one, but by restricting certain aspects of it, we are also left with only two: the 'threshold' for becoming an adopter (a value close to 1 means that the individual generally takes more convincing to adopt, while with a value close to 0 she or he will adopt sooner), and the 'persuadability' (how much an individual moves after a single interaction). Again, we explore the parameter space, comparing the average time evolution curve for the number of adopters in the simulation with the actual data. This way we study the performance of the model, and we can also compare the two models against each other.
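To make the two models a bit more concrete, here is a minimal Python sketch of both. It is not the code from the paper. I am assuming that the temporal network is stored as a time-ordered list of `(attending, fellow)` pairs, one per shift, and all the function names are made up for illustration. For the persuasion model I am also assuming details that the description above leaves open: the seeds start with opinion 1.0, everyone else starts uniformly below the threshold, each person moves a fraction `persuadability` of the gap towards the other, and adoption is permanent.

```python
import random


def simulate_si(shifts, people, seeds, p_infection, p_immune, rng):
    """One run of the SI model; returns the number of adopters after each shift."""
    # Assumption: the seeded physicians are never immune.
    immune = {p for p in people if p not in seeds and rng.random() < p_immune}
    infected = set(seeds)
    curve = []
    for a, b in shifts:
        # Try transmission in both directions within the shift.
        for src, dst in ((a, b), (b, a)):
            if src in infected and dst not in infected and dst not in immune:
                if rng.random() < p_infection:
                    infected.add(dst)
        curve.append(len(infected))
    return curve


def simulate_persuasion(shifts, people, seeds, threshold, persuadability, rng):
    """One run of the persuasion model; returns the number of adopters after each shift."""
    # Assumption: seeds are fully in favor, everyone else starts below the threshold.
    opinion = {p: 1.0 if p in seeds else rng.random() * threshold for p in people}
    adopters = set(seeds)
    curve = []
    for a, b in shifts:
        oa, ob = opinion[a], opinion[b]
        # Both agents move towards each other's opinion at the same time.
        opinion[a] = oa + persuadability * (ob - oa)
        opinion[b] = ob + persuadability * (oa - ob)
        # Assumption: once someone crosses the threshold, they remain an adopter.
        adopters.update(p for p in (a, b) if opinion[p] >= threshold)
        curve.append(len(adopters))
    return curve


def average_curve(simulate, runs, rng_seed=0, **kwargs):
    """Average the adoption curve of a model over several independent runs."""
    rng = random.Random(rng_seed)
    curves = [simulate(rng=rng, **kwargs) for _ in range(runs)]
    return [sum(step) / runs for step in zip(*curves)]
```

For example, `average_curve(simulate_si, runs=100, shifts=shifts, people=people, seeds={"A"}, p_infection=0.3, p_immune=0.3)` would give the average SI curve for one pair of parameter values, where `shifts` and `people` are whatever toy network you feed in.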

A brief word on how to pick the set of parameters that gives the average time evolution curve closest to the actual adoption curve. This is just an optimization problem, where the quantity to minimize is the distance between the two time evolution curves. Because the system is not very complicated, I can simply divide the parameter space into a grid and evaluate the distance at each point. Then I pick the optimum.
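The grid exploration can be sketched in a few lines of Python. This is only an illustration: I am assuming a sum of squared differences as the distance between curves (any other curve distance would slot in the same way), and `curve_distance`, `grid_search` and the `average_curve(a, b)` callback are hypothetical names, not the code used in the paper.

```python
from itertools import product


def curve_distance(simulated, observed):
    """Sum of squared differences between two curves (an assumed distance)."""
    assert len(simulated) == len(observed), "curves must cover the same time steps"
    return sum((s - o) ** 2 for s, o in zip(simulated, observed))


def grid_search(average_curve, grid_a, grid_b, observed):
    """Evaluate every grid point and return (distance, a, b) for the best one.

    `average_curve(a, b)` is any function that returns the model's average
    adoption curve for one pair of parameter values.
    """
    best = None
    for a, b in product(grid_a, grid_b):
        d = curve_distance(average_curve(a, b), observed)
        if best is None or d < best[0]:
            best = (d, a, b)
    return best
```

With only two parameters per model, an exhaustive sweep like this is cheap, and it also yields the whole landscape rather than just the optimum.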

Below I plot the exploration of the parameter landscape for both the infection and the persuasion models (note that I am representing the 'quality of fit' on the Z axis, as opposed to the raw distance, so I am looking for a maximum, not a minimum).

Figure: Parameter landscape and quality of fit model-actual data for the Infection model. Maximum located at: P(immune) = 0.3, P(infection) = 0.3.

Figure: Parameter landscape and quality of fit model-actual data for the Persuasion model. Maximum located at: Opinion threshold = 0.5, Persuadability = 0.1.

These are just plots that I like (they are not included in the paper itself), because I have always been interested in the idea of parameter landscapes and how optimization explores them. You can read more about model selection, including BIC, in my paper.

Once we locate the maximum (that is, the best-performing spot for each model), we can plot the average time evolution curve against the actual curve and see how each model did:

Figure: Performance of the best set of parameters for the Infection ('Contagion simple' and 'multiple dose', pink and red), and Persuasion models (blue), along with the empirical adoption curve (black), for comparison purposes. More details about the models here.

Actually, the previous figure shows multiple Infection models (some a bit more sophisticated than the one described earlier), but none of them is able to replicate the empirical curve very well. The persuasion model, however, does a much better job.

Instead of just seeing whether my models are able to replicate my empirical data, I now want to evaluate the performance of both models in a different way: I want to see if I can train the models to predict the outcome of the adoption process. To do this, I use the first half of the empirical adoption curve to train a model, obtaining the set of parameters that best fits that half of the curve, and then test how well the model performs by letting it run for the rest of the simulation time and comparing it with the second half of the empirical curve. Again, by measuring the distance between the second half of the average time evolution curve and the second half of the empirical curve, we can determine which model predicts more reliably how the adoption of innovation is going to evolve in our system.
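In code, this train-then-predict check could look roughly like the Python sketch below. Again, this is an illustration under my own assumptions: the distance is a sum of squared differences, the candidate parameters come from a plain list, and `fit_and_predict` and `average_curve` are hypothetical names rather than anything from the paper.

```python
def fit_and_predict(average_curve, grid, observed):
    """Fit on the first half of the observed curve, score on the second half.

    `average_curve(*params)` returns the model's average adoption curve over
    the full observation period; `grid` is a list of parameter tuples.
    Returns (best_params, test_error).
    """
    half = len(observed) // 2

    def distance(curve, start, stop):
        # Assumed distance: sum of squared differences over [start, stop).
        return sum((curve[t] - observed[t]) ** 2 for t in range(start, stop))

    # Training: only the first half of the empirical curve is used.
    best = min(grid, key=lambda params: distance(average_curve(*params), 0, half))
    # Testing: let the fitted model run on and compare with the second half.
    test_error = distance(average_curve(*best), half, len(observed))
    return best, test_error
```

The model with the lower `test_error` is the one that predicts the unseen second half of the curve better.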

We observe that the persuasion model fits the second half of the data much better than the contagion (infection) models, which always underestimate the amount of adoption in the system (you can read about the statistical testing for these comparisons in my paper).

The last step of my research project was to explore the impact of potential interventions in the medical setting to boost adoption. We did this using computer simulations only. We took the best model we had (the persuasion model), set its parameters to the best values obtained previously, and then implemented different types of "adoption boost" to see which one would be most efficient. That is, we asked which intervention would yield the highest number of adopters during and at the end of the observation period (while also, to be cost-efficient, trying to minimize the total number of interventions). We simulated an intervention as a physician getting her or his adoption state boosted by an external agent (for example, some other physician reminding her or him to adopt the innovation), and then continued the simulation. Our results indicate that a single, small intervention during a period when few or no adopters are working can significantly increase the final number of adopters in the system.
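As a rough illustration of what such an intervention looks like in a simulation, here is a small, deterministic Python sketch of a persuasion-style run with an optional boost. It rests on my own simplifying assumptions: shifts are a time-ordered list of pairs, the boost adds a fixed amount to one person's opinion just before a given shift, each person moves a fraction `persuadability` of the gap towards the other, and adoption is permanent. The name `run_with_boost` and the `(shift_index, person, amount)` format are hypothetical.

```python
def run_with_boost(shifts, opinions, threshold, persuadability, boost=None):
    """Run a persuasion-style simulation and return the final number of adopters.

    `opinions` maps each person to an initial opinion in [0, 1].
    `boost` is an optional (shift_index, person, amount) tuple, applied
    just before the shift with that index.
    """
    opinion = dict(opinions)
    adopters = {p for p, o in opinion.items() if o >= threshold}
    for i, (a, b) in enumerate(shifts):
        if boost is not None and boost[0] == i:
            _, person, amount = boost
            # The external agent pushes this person's opinion up, capped at 1.
            opinion[person] = min(1.0, opinion[person] + amount)
            if opinion[person] >= threshold:
                adopters.add(person)
        oa, ob = opinion[a], opinion[b]
        # Both agents move towards each other's opinion at the same time.
        opinion[a] = oa + persuadability * (ob - oa)
        opinion[b] = ob + persuadability * (oa - ob)
        adopters.update(p for p in (a, b) if opinion[p] >= threshold)
    return len(adopters)
```

Comparing the result with and without `boost`, and sweeping over when and to whom the boost is applied, is one simple way to rank candidate interventions.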
